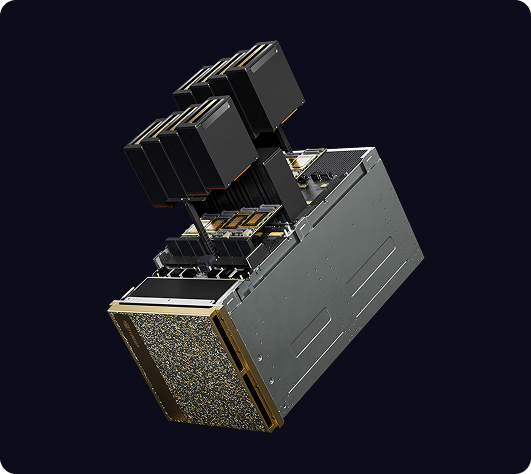

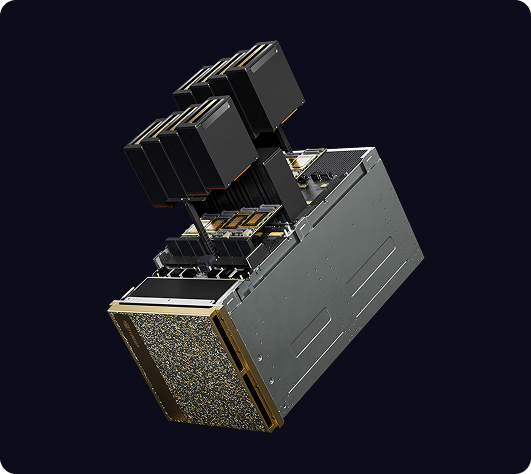

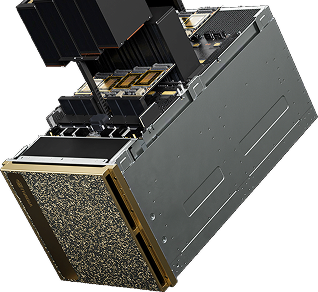

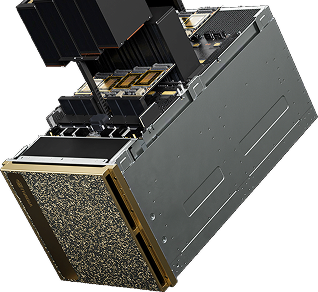

NVIDIA B200 System

Accelerating AI: Exceptional Training and Inference

Unmatched Performance

Scalability

Expert Support

Advanced Memory Technology

Each B200 GPU is equipped with 192 GB of HBM3e memory, connected via a 4096-bit interface per die (8192 bits across the GPU's two dies), for a memory bandwidth of 8 TB/s. This high-speed memory configuration enables the B200 to handle massive datasets and complex models with ease, accelerating workflows in AI training, inference, and high-performance computing.

The substantial memory capacity and bandwidth reduce bottlenecks, ensuring quicker results and empowering organizations to tackle their most demanding data challenges efficiently.
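To put the capacity and bandwidth figures in perspective, the following is a rough back-of-the-envelope sketch using only the 192 GB and 8 TB/s numbers quoted above. The function names and the bytes-per-parameter table are illustrative assumptions, and the estimate ignores activations, KV cache, optimizer state, and framework overhead, so real limits will be lower.

```python
# Back-of-the-envelope sizing from the quoted B200 memory figures.
# Assumptions (not from the source): decimal units (1 GB = 10**9 bytes),
# weights only, and peak bandwidth sustained for the whole transfer.

HBM_CAPACITY_BYTES = 192 * 10**9          # 192 GB per GPU
HBM_BANDWIDTH_BYTES_PER_S = 8 * 10**12    # 8 TB/s per GPU

# Storage cost per model parameter at common precisions (illustrative).
BYTES_PER_PARAM = {"FP16": 2.0, "FP8": 1.0, "FP4": 0.5}


def full_sweep_seconds(capacity=HBM_CAPACITY_BYTES,
                       bandwidth=HBM_BANDWIDTH_BYTES_PER_S):
    """Time to read every byte of GPU memory once at peak bandwidth."""
    return capacity / bandwidth


def max_params(precision, capacity=HBM_CAPACITY_BYTES):
    """Upper bound on parameters that fit if memory held weights only."""
    return capacity / BYTES_PER_PARAM[precision]


if __name__ == "__main__":
    print(f"Full memory sweep: {full_sweep_seconds() * 1e3:.0f} ms")
    for precision in BYTES_PER_PARAM:
        billions = max_params(precision) / 1e9
        print(f"{precision}: up to ~{billions:.0f}B parameters (weights only)")
```

Under these assumptions, a single pass over the full 192 GB takes about 24 ms, which is a lower bound on any step that has to touch every weight once.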

Enhancing Data Analytics Performance

With support for the latest compression formats, such as LZ4, Snappy, and Deflate, the B200 delivers up to 6x faster query performance than traditional CPUs and up to 2x the speed of NVIDIA H100 Tensor Core GPUs.

This exceptional capability accelerates workflows such as predictive modeling, real-time streaming, and large-scale data processing, making it an ideal choice for organizations demanding faster insights and unmatched efficiency.

On-Demand AI Power:

Get the AMD Instinct MI300X GPU as a Service

Harness the power of the AMD Instinct MI300X GPU without a long-term commitment. Rent on-demand access to cutting-edge AI and HPC performance, optimized for flexibility and cost efficiency, with pricing at $2.51 per GPU per hour on a two-year commitment. Shorter terms are available.
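As a rough budgeting aid, the hourly rate above can be turned into a total with simple multiplication. The sketch below assumes the quoted $2.51 two-year-commitment rate applies to every hour and that billing is per GPU-hour with no minimums, taxes, or fees; the function name is hypothetical, and rates for shorter terms are not stated here.

```python
from decimal import Decimal

# Quoted rate for a two-year commitment; shorter-term rates are not given.
RATE_PER_GPU_HOUR = Decimal("2.51")


def rental_cost(gpus: int, hours: int,
                rate: Decimal = RATE_PER_GPU_HOUR) -> Decimal:
    """Total cost in USD for `gpus` GPUs rented for `hours` hours each."""
    return rate * gpus * hours


if __name__ == "__main__":
    # One 8-GPU node for a single day.
    print("8 GPUs, 24 hours:", rental_cost(8, 24))
    # One GPU running continuously for two 365-day years.
    print("1 GPU, two years:", rental_cost(1, 2 * 365 * 24))
```

`Decimal` is used instead of floating point so that currency amounts stay exact.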